The Creature Server is a fairly complex soft real-time application at the heart of my animatronics control network. It’s written in C++ and runs on macOS and Linux, but the primary target is Linux.

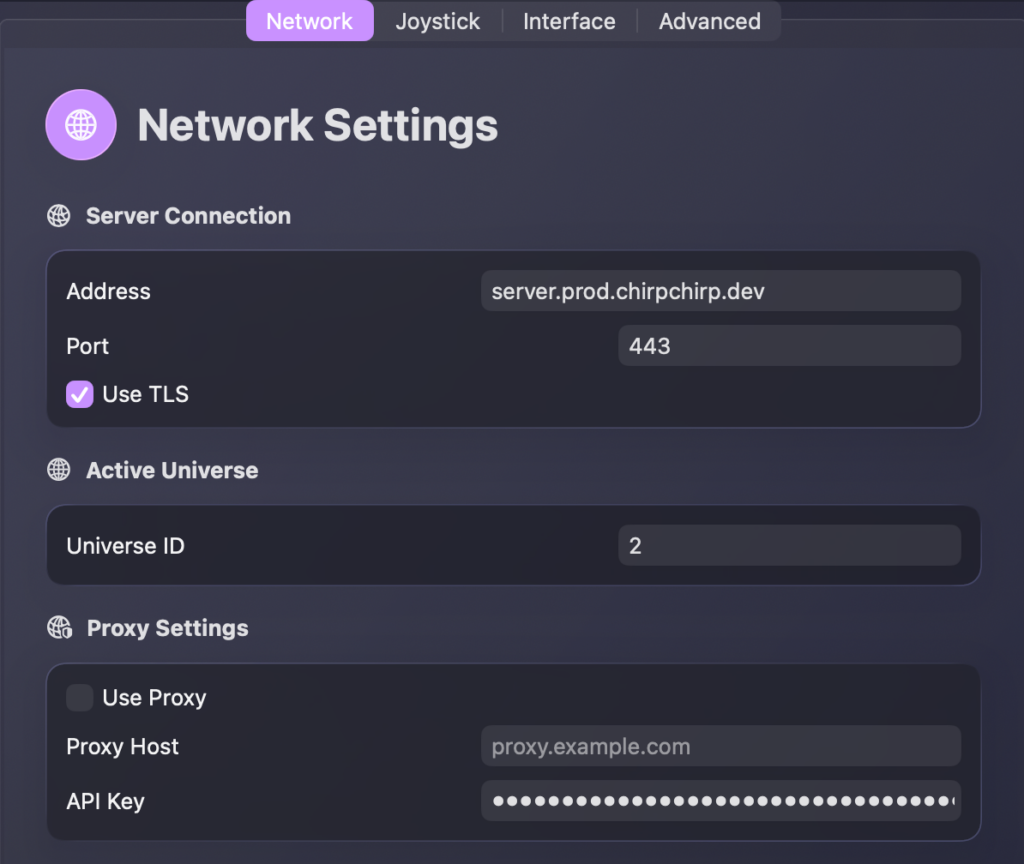

The Creature Console and the server communicate over a RESTful API and a WebSocket. Because moving animatronics is a real-time operation, this communication isn’t designed to traverse anything other than a LAN. I only use Ethernet, to keep latency low and jitter to a minimum. While the primary use is on a LAN, I do have a proxy server on my network that exposes both the RESTful API and the WebSocket to the Internet, locked behind an API key.

The server itself is stateless. All state is maintained in the database (MongoDB).

Event Loop

The heart of the Creature Server is an event loop. Each frame is 1 ms (1000 Hz).

It maintains an event queue internally, and on each frame it looks in the queue to see if there’s work to do. If there is, it performs that work. If there’s not, it sleeps and waits for the next frame.
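As a rough illustration of that queue, here is a minimal sketch in C++. Every name in it (`Event`, `EventQueue`, `schedule`, `runFrame`) is hypothetical and chosen for this example, and the use of a priority queue keyed by frame number is an assumption about one reasonable way to do it, not a description of the server’s actual internals.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical types for illustration only.
using FrameNumber = std::uint64_t;

struct Event {
    FrameNumber frame;             // frame on which this event should run
    std::function<void()> action;  // the work to perform
};

// Comparator that puts the event with the lowest frame number on top.
struct LaterFrame {
    bool operator()(const Event& a, const Event& b) const {
        return a.frame > b.frame;
    }
};

class EventQueue {
public:
    void schedule(FrameNumber frame, std::function<void()> action) {
        assert(action && "an event needs something to do");
        events_.push(Event{frame, std::move(action)});
    }

    // Run every event due on or before `now`; returns how many ran.
    // A result of zero means there was no work and the loop can sleep.
    std::size_t runFrame(FrameNumber now) {
        std::size_t ran = 0;
        while (!events_.empty() && events_.top().frame <= now) {
            Event next = events_.top();  // copy out, since top() is const
            events_.pop();
            next.action();
            ++ran;
        }
        return ran;
    }

    bool empty() const { return events_.empty(); }

private:
    std::priority_queue<Event, std::vector<Event>, LaterFrame> events_;
};
```

The outer loop would call `runFrame` once per frame with the current frame number and then sleep until the next frame is due.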

When an animation is scheduled, each frame of animation is put into the queue with the spacing that the animation was recorded at (usually 50 Hz, or 20 ms per frame).
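Because a server frame is 1 ms, the spacing works out neatly: an animation recorded at 20 ms per frame lands on every 20th server frame. The helper below is a hypothetical sketch of that arithmetic (the name `animationFrameSlots` and its parameters are made up for this example).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: compute the server frame number for each frame of an
// animation. With 1 ms server frames, the animation's milliseconds-per-frame
// is also the number of server frames between consecutive animation frames.
std::vector<std::uint64_t> animationFrameSlots(std::uint64_t startFrame,
                                               std::size_t frameCount,
                                               std::uint32_t msPerFrame) {
    assert(msPerFrame > 0);
    std::vector<std::uint64_t> slots;
    slots.reserve(frameCount);
    for (std::size_t i = 0; i < frameCount; ++i) {
        slots.push_back(startFrame + static_cast<std::uint64_t>(i) * msPerFrame);
    }
    return slots;
}
```

For example, a four-frame animation starting at server frame 1000 and recorded at 50 Hz would occupy frames 1000, 1020, 1040, and 1060.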

This makes the Creature Server soft real-time: it uses the wall clock for pacing, but overrunning a frame only results in time being dilated, not a crash or a preemption of the work. Preempting the work wouldn’t make sense, since it would leave the creature in an odd state. It’s better for time to dilate slightly instead.
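One way to get that dilation behavior is in how the loop picks its next wake-up time. The sketch below is an assumption about how this could be done, not the server’s actual pacing code: `nextDeadline` is a hypothetical name, and I’ve used `std::chrono::steady_clock` here so the example isn’t affected by system clock adjustments.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>

using Clock = std::chrono::steady_clock;

// Hypothetical pacing helper. `previousDeadline` is when the frame that just
// finished was supposed to start; `now` is the time after its work completed.
Clock::time_point nextDeadline(Clock::time_point previousDeadline,
                               Clock::time_point now,
                               Clock::duration framePeriod) {
    assert(framePeriod > Clock::duration::zero());
    const Clock::time_point ideal = previousDeadline + framePeriod;
    // On time: wait until the ideal deadline.
    // Overrun: start the next frame right away and pace from there. No frames
    // are skipped to catch up, so the frame count falls behind the clock.
    return std::max(ideal, now);
}
```

If a frame’s work takes 3 ms instead of fitting into 1 ms, the next frame simply starts late and every later frame shifts with it, which is the slight dilation described above.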

Communication

The network communication is pretty complex, so rather than explain it here, I’ve dedicated an entire page to it.

Lip Sync Data

The server is where lip sync data is created. Once a sound is uploaded to the server, there are several APIs to generate the lip sync data from the dialog.

To accomplish this, I use a lightly modified version of Rhubarb Lip Sync. My modifications aren’t to the application itself; they allow me to build a Debian package of the application for easy distribution to the server (and other devices where I’m writing code).

I used to create the lip sync data manually from the command line and then overwrite one of the tracks in an animation when it was being created. Moving this functionality to the server has taken a lot of the toil out of building animations.

Voice Generation

All voice generation is done via ElevenLabs. Each creature has its voice settings recorded in its Creature Definition file, and the server uses them when calling out to the ElevenLabs API.

Legacy Stuff

The current version of the Creature Server runs on some beefy hardware on my network. As my use of animatronics has grown, along with the amount of processing I ask the server to do, it has outgrown what can run comfortably on a Pi.

When it was running on a Pi, I made a cute hat for a Pi running the server! The LEDs are connected via GPIO pins on the server, and are triggered via events in the event loop. (Turning them on and off is scheduled like any other event.)