I have spent the last few months re-imagining how to control a whole flock of Creatures at once! I made some really bad assumptions the first time around that made it very difficult for me to make animations or control more than one Creature at a time. This blog post explains the mistakes I made and how I overcame them.

Understanding a Motion Frame

It helps to know a bit about how my motion frame system works, so let me explain a bit. 😃

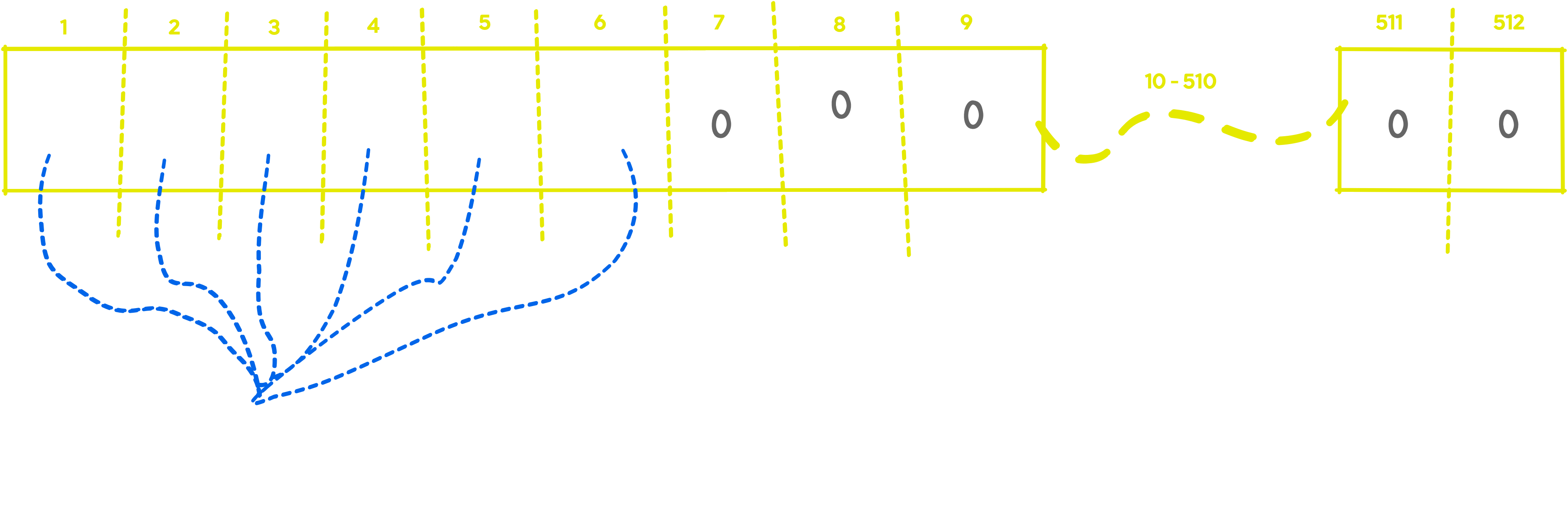

At the core, my motion system is based on DMX512 and sACN. (You can think of sACN as “DMX512 over UDP.”) DMX512 is made up of frames that are 512 bytes long. In a theatrical lighting setup each of the bytes is a “channel” that sets the brightness of a light over a range of 0-255. (0 is off and 255 is full.) Lighting folks at theaters all over the world use this standard for lighting control on stage.

I realized that the same thing can be used to control animatronics! I re-use the DMX512 standard to make my magic happen. Each byte sets the position of one joint in a Creature. Here’s an example:

In this example, the Creature would set its six motors to a position of 128, 48, 245, 8, 45, and 46. Since it only has six motors it will ignore everything else in the frame. It’s only looking for its own commands.

The core of my design is that every Creature looks at the DMX512 stream, and knows where its “offset” in the array is. (I now call this the “starting channel” since it’s easier for other people to understand.) For example, Beaky’s offset is 1. She starts looking at position 1 and then the following five bytes to know what to do. Mango’s offset is 10, so he looks at byte 10, and then the following five bytes for the rest.
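To make the starting channel idea concrete, here is a minimal Python sketch of how a Creature could pick its own bytes out of a frame. It is only an illustration, not the real firmware: the function name `creature_slice`, the 513-slot buffer (slot 0 plus channels 1-512), and the values used for Mango are all made up for this example.

```python
FRAME_SLOTS = 513  # assumption: slot 0 (reserved) plus channels 1-512


def creature_slice(frame: bytes, starting_channel: int, motor_count: int) -> list[int]:
    """Return the motor positions for one Creature from a full frame."""
    last_channel = starting_channel + motor_count - 1
    if starting_channel < 1 or last_channel > 512:
        raise ValueError("creature does not fit in channels 1-512")
    # Slicing a bytes object and passing it to list() yields plain integers.
    return list(frame[starting_channel:last_channel + 1])


# Build a frame by hand. Beaky's values come from the example above;
# Mango's values are invented for illustration.
buffer = bytearray(FRAME_SLOTS)
buffer[1:7] = bytes([128, 48, 245, 8, 45, 46])
buffer[10:16] = bytes([10, 20, 30, 40, 50, 60])
frame = bytes(buffer)

beaky = creature_slice(frame, starting_channel=1, motor_count=6)
mango = creature_slice(frame, starting_channel=10, motor_count=6)
print(beaky)
print(mango)
```

Each Creature reads only its own six slots and ignores the rest of the frame, which is why several Creatures can share one universe.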

I figured this would scale pretty well. With 512 slots to pick from, and each Creature needing 4-8 slots each, I can shove a LOT of Creatures into a single 512 byte control frame. (If I ever get to the point where I have more than what I can do in 512 bytes I will re-imagine it again, and be happy that I’m at that point!) So far it has proven to be highly reliable and I’m happy with this design.

(Before the Computer Science people in the room go “HOW DARE YOU LABEL AN ARRAY STARTING AT 1!!!!,” please go look at the DMX512 standard and understand that byte 0 is reserved for control data! 😅)

Early Mistakes

With that out of the way, let’s talk about some bad assumptions I made early on.

First Server Design

The heart of my control system is the Creature Server. It’s a piece of software that runs on a Linux (or macOS) host and is responsible for maintaining the state of the system. When I’m using DMX512 directly, the DMX bus starts here. When I’m using sACN, it’s the one that sends out the actual multicast UDP packets on the wire. When an animation is playing it is the one that will go fetch the animation from the database and schedule it in its own Event Loop. It’s also where the audio is played so that it can be scheduled in sync with the animation.

The first version of this server did not maintain the state of the sACN / DMX512 universe itself. It simply either passed along the frames from the Creature Console that was connected to it at the time, or replayed the frames that were found in the animation out of the database. It did no processing of any kind. (It wasn’t even aware of what was in those packets; it was just moving 512 bytes of data around.)

…and this worked great when it was just one character! I was able to make animations, save them, and replay them back.

First Creature Console Design

The first Creature Console was designed in the same way. It put together data coming in off a joystick, kept it in memory, and then relayed it on to the server when its Event Loop told it to. (I run the system at a pacing of 50FPS, or 20ms per frame. I chose this value because it’s a good match to both the DMX512 standard, and to how fast a hobbyist servo can respond to changes.)
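The 50FPS pacing can be sketched in a few lines of Python. This is a hedged illustration of one common way to pace a loop, not the Console's actual Event Loop: `run_frames` and `on_frame` are hypothetical names, and the loop uses absolute deadlines from `time.monotonic()` so that time spent inside a frame does not push later frames back.

```python
import time

FPS = 50
FRAME_INTERVAL = 1.0 / FPS  # 0.02 seconds, i.e. 20ms per frame


def run_frames(on_frame, frame_count: int) -> None:
    """Call on_frame(frame_number) once per 20ms frame, frame_count times."""
    next_deadline = time.monotonic()
    for frame_number in range(frame_count):
        on_frame(frame_number)
        # Advance the deadline by a fixed step rather than sleeping a fixed
        # amount, so the work done in on_frame does not accumulate as drift.
        next_deadline += FRAME_INTERVAL
        delay = next_deadline - time.monotonic()
        if delay > 0:
            time.sleep(delay)


seen = []
run_frames(seen.append, 3)  # three frames, roughly 60ms in total
print(seen)
```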

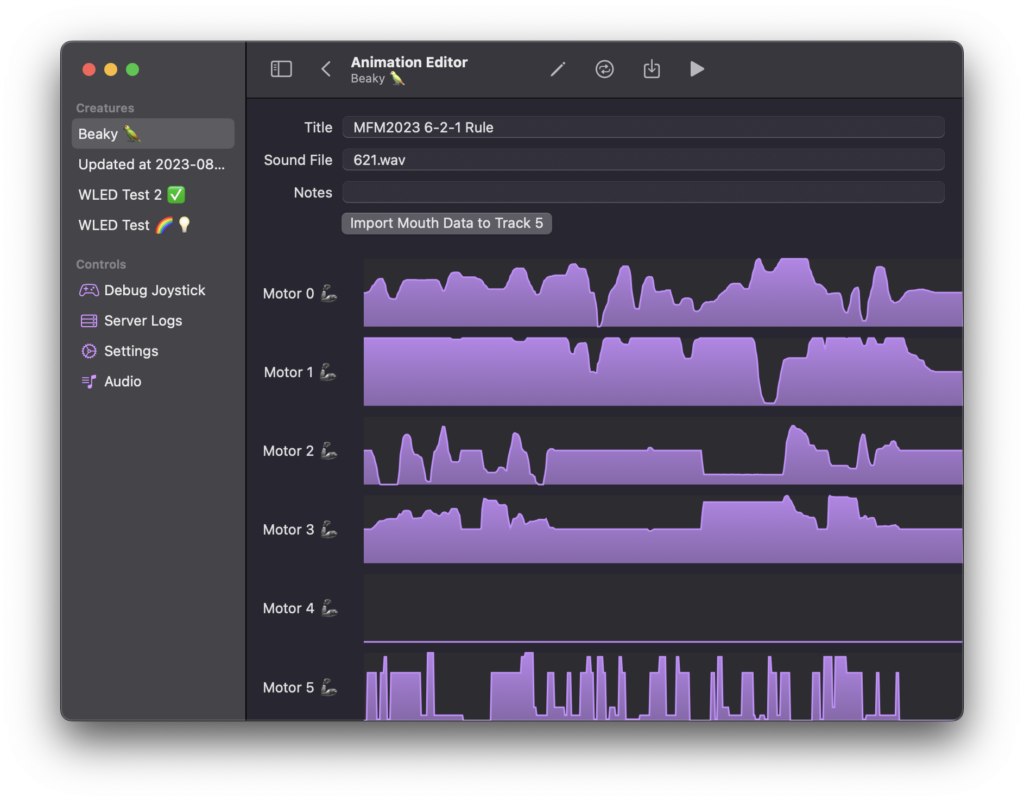

Here’s a screenshot of the first version of the Creature Console, with the “Don’t Forget the 6-2-1 Rule” animation from MFM 2023 loaded. Each of the charts represents one motor’s motion. All of the animations I’ve made up until this point were like this.

The Creature Console was also unaware of where each Creature’s motors were in the frame. It was just recording what it saw off a joystick (or imported from a file, as seen for track 5), saving it in memory, and then sending it to the server to save to the database so it could be replayed later.

I had ideas in my head of making something like an audio tracker, where I could work with a bunch of frames at once and move things around. (i.e., if I had a bunch of Creatures in an animation I could record each set on its own and work within the file, just like how audio folks work.) This turned out to be a bad assumption.

- This would be very complex for me to write in software. I don’t have the time to re-design an audio tracker.

- Without knowledge of where each Creature is in the file, each animation would have to be re-worked if I moved a creature around within the network.

- I didn’t have a way to make more than one universe. (i.e., a development and production universe)

- Since the Creature Console and Creature Server didn’t have knowledge of what was in the data, only one thing could be happening at a time. (The server didn’t have any state at all.)

So basically I’d coded myself into a corner.

How I Re-Imagined It

The heart of what I came up with is this: The Creature Server needs to maintain the state of the universe in memory at all times. Being unaware of what data it was sending was not going to work long term.

In addition to this, I removed the notion that the channel offset for a creature is fixed. I have that data in a database and my server has lots of memory, so it should be able to remix the data on its own. (By “lots of memory” I mean several gigabytes. This is extreme overkill; the whole process uses less than 30MB!)

How the Creature Server Works Now

I changed the Creature Server to maintain the state of the universe in memory. (If there’s more than one universe in use, it will keep track of them all. Normally there are at least two, development and production.) Instead of sending DMX512 / sACN packets whenever it gets them from the Creature Console / animation in the database, it sends them on a fixed interval. (20ms)

When the Creature Console is in streaming mode, it will tell the server “here’s some motion data for Beaky, please send it to her on universe 1.” The server will then look up Beaky out of the in-memory cache, determine that her offset is 1, and then update the state of the universe to reflect this. It will not actually send the data at that exact moment, it waits until the next frame to transmit it.

This also has a nice side-effect of constantly sending the state of the universe over the wire every 20ms. If there’s a dropped packet it doesn’t really matter, another one will be coming 20ms later.

So, more-or-less, a request from the Creature Console or a stored animation in the database only stages the update to go out on the next frame. This allows me to mix a bunch of things at once since there’s only one source of truth on what the state of the universe is, in the Creature Server’s memory.
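The stage-then-send model described above can be sketched roughly like this in Python. It is a simplified illustration under stated assumptions, not the real Creature Server: the class and method names (`CreatureServer`, `stage`, `tick`) are hypothetical, the starting channels live in a plain dictionary instead of a database-backed cache, and "sending" just appends to a list rather than transmitting anything on the wire.

```python
class CreatureServer:
    """Toy model: requests only stage data; tick() is the only thing that sends."""

    def __init__(self, starting_channels: dict[str, int]) -> None:
        self.starting_channels = starting_channels  # stand-in for the creature cache
        self.universes: dict[int, bytearray] = {}   # one 513-slot buffer per universe
        self.sent: list[tuple[int, bytes]] = []     # stand-in for the network

    def stage(self, creature: str, universe: int, motion: bytes) -> None:
        """Write one Creature's motion into the universe state. Sends nothing."""
        offset = self.starting_channels[creature]
        if offset < 1 or offset + len(motion) - 1 > 512:
            raise ValueError("motion data does not fit in channels 1-512")
        state = self.universes.setdefault(universe, bytearray(513))
        state[offset:offset + len(motion)] = motion

    def tick(self) -> None:
        """Called once per 20ms frame: transmit the whole state of every universe."""
        for universe, state in self.universes.items():
            self.sent.append((universe, bytes(state)))


server = CreatureServer({"Beaky": 1, "Mango": 10})
server.stage("Beaky", 1, bytes([128, 48, 245, 8, 45, 46]))
server.stage("Mango", 1, bytes([1, 2, 3, 4, 5, 6]))  # made-up values
sent_before_tick = len(server.sent)  # staging alone transmits nothing

server.tick()
universe, frame = server.sent[0]
print(universe, list(frame[1:7]), list(frame[10:16]))
```

Because both requests land in the same buffer before the tick, the frame that goes out carries Beaky's and Mango's data together, regardless of which client each request came from.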

How the Creature Console Works Now

I also made some major changes to how the Creature Console works internally to account for this new model.

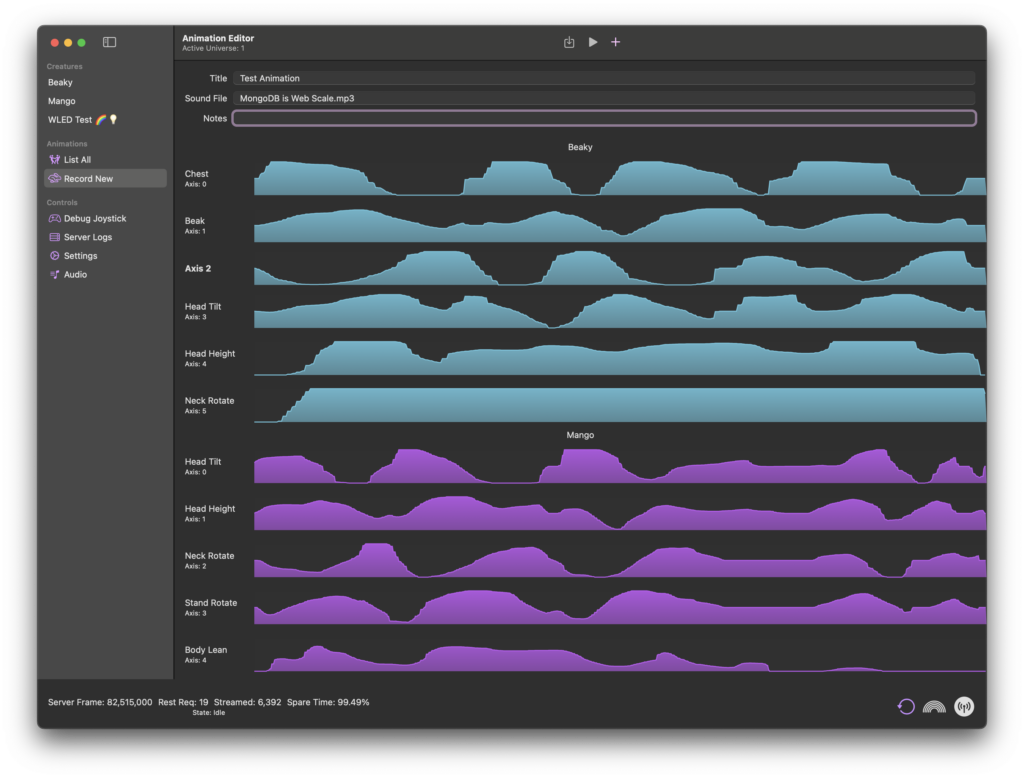

Here’s a screenshot of how the console looks today, at the time I’m writing this. (It’s a moving target. I never consider it finished!)

The main thing to see is that this is an animation for both Beaky and Mango, at the same time. I decided that rather than thinking about Animations as an audio track, I would instead think of them as one Animation with several tracks within it. Each “track” is for one Creature, so this one has two tracks. Since I can record each track independently, I no longer have the problem of having to engineer a piece of software like an audio tracker.
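One way to picture "one Animation with several tracks" is as a small data structure. The Python sketch below is an assumption about the general shape only: the class names, field names, and sample data are invented here and are not taken from the Creature Console's actual code.

```python
from dataclasses import dataclass, field


@dataclass
class Track:
    creature_id: str     # which Creature this track belongs to (no offset stored)
    frames: list[bytes]  # one entry of motor positions per 20ms frame


@dataclass
class Animation:
    title: str
    tracks: list[Track] = field(default_factory=list)

    def frame_count(self) -> int:
        """Length of the longest track, in frames."""
        return max((len(track.frames) for track in self.tracks), default=0)

    def duration_ms(self, ms_per_frame: int = 20) -> int:
        return self.frame_count() * ms_per_frame


# Two tracks recorded independently; all values are made up.
duet = Animation(
    title="Example duet",
    tracks=[
        Track("Beaky", [bytes([128, 48, 245, 8, 45, 46])] * 3),
        Track("Mango", [bytes([1, 2, 3, 4, 5, 6])] * 2),
    ],
)
print(duet.frame_count(), duet.duration_ms())
```

Note that a track names its Creature but carries no channel numbers, so moving a Creature to a different starting channel would not require touching the recorded data.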

Nowhere in the console is knowledge of what the Creature’s offset is. It just knows “this is for Beaky” and “this is for Mango.” It’s up to the server to look that up.

The Creature Console keeps track of what universe it’s working in, but that’s all it knows. When it’s in streaming mode it sends the server data that says “please tell Beaky to do this on universe 1” and the server handles it from there.

I also broke the association from Creatures to Animations, which is why Animations are now on the left bar. Any Animation can involve whatever Creatures I wish.

Did it work?

Yes!

Here’s the moment right after I got it working fully. I took this video for my friends to see:

There’s a lot going on here.

There are two copies of the Creature Console running. One is on my iPad and one is on one of my Macs. (The Creature Console works on both iOS and macOS.) The blue joystick, which is one of the ones I made myself just for controlling animatronics, is hooked up to the Mac over USB. The purple PS5 controller is paired with the iPad over Bluetooth.

Neither console knows the other exists. They’re just telling the server “hey I’ve got data for Beaky or Mango on universe 1” every 20ms, and the server is doing the work of combining those requests together. The Creature Consoles are talking to the Creature Server over just a standard WebSocket. The JSON data they’re sending only contains the Creature’s ID (which is just a UUID), the active universe, and a base64 encoded string that contains the motion data. That’s it.
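For a feel of what such a message might look like, here is a small Python sketch that packs and unpacks those three pieces of information. The JSON key names (`creature_id`, `universe`, `data`), the helper function names, and the UUID are all assumptions made for illustration; the real wire format may differ.

```python
import base64
import json
import uuid


def encode_motion_message(creature_id: uuid.UUID, universe: int, motion: bytes) -> str:
    """Build the JSON text a console could send for one Creature."""
    return json.dumps({
        "creature_id": str(creature_id),                  # hypothetical key name
        "universe": universe,                             # hypothetical key name
        "data": base64.b64encode(motion).decode("ascii"), # hypothetical key name
    })


def decode_motion_message(text: str) -> tuple[uuid.UUID, int, bytes]:
    """Reverse of encode_motion_message, as a server might do on receipt."""
    payload = json.loads(text)
    return (
        uuid.UUID(payload["creature_id"]),
        payload["universe"],
        base64.b64decode(payload["data"]),
    )


beaky_id = uuid.UUID("00000000-0000-0000-0000-000000000001")  # made-up ID
message = encode_motion_message(beaky_id, 1, bytes([128, 48, 245, 8, 45, 46]))
decoded = decode_motion_message(message)
print(message)
```

Base64 is needed because JSON strings cannot carry arbitrary raw bytes, and motor positions can take any value from 0 to 255.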

Wrapping Up!

So, the tl;dr is that the server now maintains state itself, I do not keep track of the creature’s offset anywhere but in one place in the database, and the Creature Console only sends information for one Creature. The Creature Server is what does the work of combining it all together.

This was a TON of work. Many months. Here’s looking forward to a lot more fun things in the future! 💜